Bibblio is now a part of EX.CO

EX.CO - The Experience Company, the world’s leading content experience platform, powering billions of personalized interactions around the web, has acquired Bibblio with the view to integrate our AI-driven recommendation and personalization capabilities into their world-class experience solutions.

Tom Pachys, co-founder and CEO at EX.CO, said “This acquisition allows us to scale our technology and propel businesses into a new era of hyper-personalization so they can easily tailor their digital properties with dynamic content to create unique experiences.”

Meet EX.CO

Founded in 2012, Walt Disney-backed EX.CO reimagines how brands, publishers, and e-commerce businesses engage with their audiences across their digital properties to drive meaningful growth. EX.CO’s always-on, codeless, dynamic experiences are trusted by clients such as Audi, Hearst, Nasdaq, Sky News, ViacomCBS, VICE, and Ziff Davis.

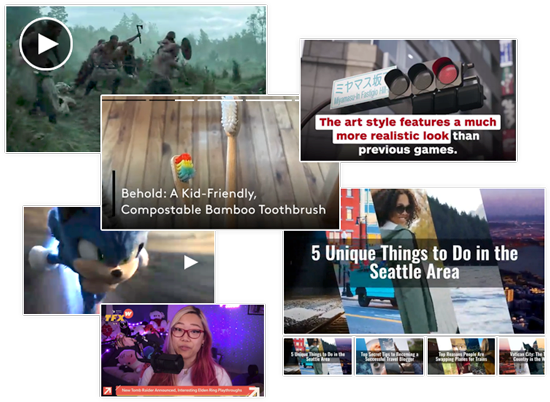

EX.CO for Publishers

Publishers can access a full self-serve video stack — from content management to ad serving and monetization — along with a suite of solutions to drive first-party data collection and subscriptions.

Trusted by over 500 of the world's leading publishing titles, use these fully customizable formats and placements to quickly deliver the results you crave.